Scientists develop completely mobile Virtual Reality tools to be used anywhere

Two new projects from the Sensing, Interaction & Perception Lab of Professor Christian Holz advance the versatility of today’s Virtual Reality (VR) platforms by taking advantage of common devices such as a fitness watch or a smartphone. Built on novel AI frameworks, they let VR users interact freely in 3D space, grab objects that are out of sight, and even play in spaces as confined as a car, train or airplane seat.

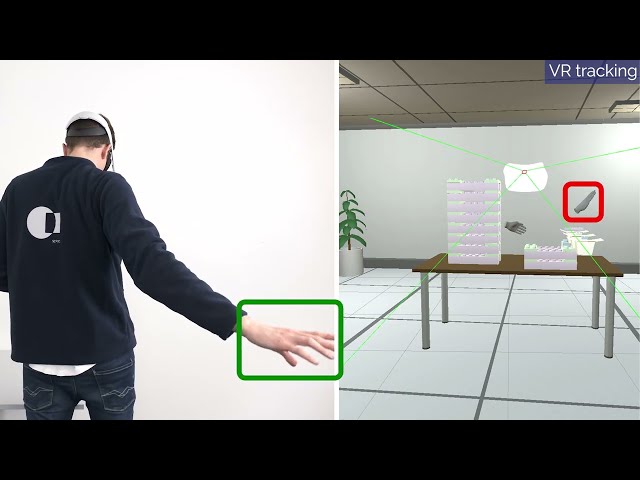

HOOV: Hand Out-Of-View interaction for Virtual Reality

In their first project, the researchers developed an AI method that mimics one of our most important senses: proprioception. Even if you close your eyes, you can easily touch your nose, or bring a glass of water to your mouth. How about scratching the back of your head or tying a ponytail? This is possible thanks to the sense of proprioception, the ability to sense where our limbs are in space.

Virtual Reality (VR) and Augmented Reality (AR) devices, in contrast, rely only on visual information to reconstruct the space around the user. This means that when wearing VR goggles, we can only interact with objects directly in front of us and must constantly look at our hands. This is impractical and considerably limits the usability of VR. A simple virtual bow-and-arrow game, in which the hand pulling the string quickly leaves the user’s view, cannot be reproduced well, and neither can games involving swords, tennis rackets or water paddles. While major VR manufacturers have now embedded cameras into their controllers to mitigate this challenge, such hardware changes add system complexity, computation and cost.

The team led by Professor Christian Holz has developed a simpler, less expensive and more efficient solution: HOOV, or “Hand Out-Of-View” tracking of proprioceptive interactions. Their novel machine learning-based method uses a lightweight wristband to continuously estimate the user’s hand position in 3D from the signals of a simple inertial motion sensor, the kind already built into fitness trackers and smartwatches. This lets users efficiently perform small tasks around their body without having to turn their heads to look at their hands.

“HOOV brings us one step closer to interacting in VR much like we would in the real world, without requiring additional instrumentation or complex stationary setups,” says Christian Holz, Professor and Head of the Sensing, Interaction & Perception Lab.

Paul Streli, the lead doctoral student on the project, explains: “We are tackling a long-standing challenge in robotics to infer 3D locations from noisy inertial motion signals. HOOV’s computational pipeline achieves this by leveraging the kinematic constraints of the human body.”
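To see why inferring position from inertial signals is hard, consider naive dead reckoning: integrating a wrist accelerometer twice turns even a tiny constant sensor bias into a position error that grows roughly with the square of time. The Python sketch below is a hypothetical one-dimensional illustration, not HOOV’s pipeline, which the team describes as machine-learning based. The sampling rate, bias, arm reach and the simple clamping rule are all assumptions, chosen only to contrast unconstrained integration with one crude kinematic constraint: the hand can never be farther from the shoulder than the arm can reach.

```python
# Hypothetical 1-D illustration, NOT HOOV's machine-learning pipeline.
# It shows why dead reckoning from a wrist IMU drifts and how one crude
# kinematic constraint (the hand stays within arm's reach of the shoulder)
# bounds the error. All constants below are assumed values.

DT = 0.01          # assumed sample period in seconds (100 Hz)
ARM_REACH = 0.7    # assumed maximum shoulder-to-hand distance in metres
BIAS = 0.02        # assumed constant accelerometer bias in m/s^2


def dead_reckon(accels, start, clamp_to_reach=False):
    """Double-integrate 1-D acceleration samples into a position estimate.

    Positions are measured from the shoulder. With clamp_to_reach=True the
    estimate is projected back into [-ARM_REACH, ARM_REACH] after each step.
    """
    velocity, position = 0.0, start
    for a in accels:
        velocity += a * DT
        position += velocity * DT
        if clamp_to_reach and abs(position) > ARM_REACH:
            position = max(-ARM_REACH, min(ARM_REACH, position))
            velocity = 0.0  # a fully extended arm cannot keep moving outward
    return position


# The real hand rests 0.3 m from the shoulder for one minute, so the true
# acceleration is zero and the sensor reports only its bias.
true_position = 0.3
samples = [BIAS] * (60 * 100)

free = dead_reckon(samples, true_position)
bounded = dead_reckon(samples, true_position, clamp_to_reach=True)
print(f"unconstrained error: {abs(free - true_position):.2f} m")
print(f"constrained error:   {abs(bounded - true_position):.2f} m")
```

Under these assumptions the unconstrained estimate drifts tens of metres away within a minute, while the clamped one stays within arm’s reach. Clamping only bounds the error; it cannot tell where within reach the hand actually is, which is the harder problem a learned model would have to address.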

HandyCast: mobile bimanual input for Virtual Reality in space-constrained settings

In a second project, the researchers address a different challenge: today’s VR systems are designed for stationary use, typically at home in an empty, open space.

With HandyCast, Professor Holz’s team makes VR usable anywhere: users can interact with both hands in large virtual spaces even in the tiniest settings, such as an airplane seat, where standing up or waving one’s arms around is inconvenient or impossible.

The key component of the researchers’ novel method is the use of a smartphone as a controller for Virtual Reality. HandyCast combines the smartphone’s small motions with fine-grained touch input and maps them to the 3D positions of virtual hands. The technology builds on the observation that users naturally embody their actions when holding a controller, for example leaning in a direction as if to produce stronger input, or pressing buttons harder.

“Our research shows that these natural body motions are accurate enough for HandyCast’s amplification to allow fine-grained control over two virtual hands at the same time, while using only a single phone,” explains Christian Holz.

Mohamed Kari, the doctoral student in charge of the project, adds: “Despite the fact that our pose and touch transfer fuses input from two modalities, we designed our amplification function such that users are capable of learning to control the two independent VR hand avatars in a joint and smooth motion. Learning this took most participants less than a minute.”
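HandyCast’s actual amplification function is not described in this article, but the general idea of fusing phone pose and touch into two hand positions can be sketched. In the hypothetical Python example below, the phone’s small displacement from a rest pose is multiplied by a gain and applied to both virtual hands, while a drag on the left or right half of the touchscreen nudges only the matching hand. The gains, the screen split, the coordinate convention and the names `Touch`, `amplify` and `virtual_hands` are illustrative assumptions, not the published method.

```python
from dataclasses import dataclass

# Hypothetical sketch, NOT HandyCast's published amplification function.
# Assumption: the phone's displacement is scaled by a shared gain for both
# hands, and each screen half adds an individual offset to one hand.

POSE_GAIN = 8.0    # assumed: 1 cm of phone motion -> 8 cm of hand motion
TOUCH_GAIN = 0.5   # assumed: metres of hand offset per full-screen drag

Vec3 = tuple[float, float, float]  # (x right, y up, z forward) in metres


@dataclass
class Touch:
    u: float   # normalized horizontal touch position: 0 = left edge, 1 = right edge
    du: float  # horizontal drag since touch-down, in screen widths
    dv: float  # vertical drag since touch-down, in screen heights (negative = up)


def amplify(phone_offset: Vec3, rest: Vec3) -> list[float]:
    """Scale the phone's displacement from its rest pose into hand space."""
    return [r + POSE_GAIN * d for r, d in zip(rest, phone_offset)]


def virtual_hands(phone_offset: Vec3, touches: list[Touch],
                  left_rest: Vec3, right_rest: Vec3) -> tuple[Vec3, Vec3]:
    """Fuse phone pose (shared) and touch drags (per hand) into two hand positions."""
    left = amplify(phone_offset, left_rest)
    right = amplify(phone_offset, right_rest)
    for t in touches:
        hand = left if t.u < 0.5 else right  # the touched screen half picks the hand
        hand[0] += TOUCH_GAIN * t.du          # drag right -> hand moves right
        hand[1] -= TOUCH_GAIN * t.dv          # drag up (negative dv) -> hand moves up
    return tuple(left), tuple(right)


# Usage: the phone is pushed 2 cm forward while the right thumb drags upward.
left, right = virtual_hands(
    phone_offset=(0.0, 0.0, 0.02),
    touches=[Touch(u=0.8, du=0.0, dv=-0.2)],
    left_rest=(-0.2, 0.0, 0.3),
    right_rest=(0.2, 0.0, 0.3),
)
print("left hand: ", left)
print("right hand:", right)
```

Sharing the amplified pose term while splitting the touch term is one way both hands could move together in a joint, smooth motion yet still be steered independently; the real HandyCast mapping may differ.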

ACM CHI conference

The two projects, HOOV and HandyCast, were presented on Wednesday, 26 April, by doctoral students Paul Streli (HOOV) and Mohamed Kari (HandyCast) at this year’s ACM CHI conference, one of the largest international conferences in the field of Human-Computer Interaction.