Researchers at ETH Zurich have developed an AI touchscreen with super-resolution

Researchers from the Sensing, Interaction & Perception Lab have developed CapContact, an AI solution that enables touchscreens to sense at eight times the resolution of existing devices.

While the displays in our smartphones have been developed to have ever more pixels and brilliant colours, the touch sensors we use to provide input to our devices have not changed for decades. Doctoral student Paul Streli and Professor Christian Holz from the SIPLAB at the Department of Computer Science have now developed an AI that gives touchscreens super-resolution.

Professor Holz, Mr Streli, you used AI to make the touchscreens we use every day much more accurate. What gave you the idea?

Christian Holz: Going beyond the purpose of touchscreens to provide mere (x,y) touch coordinates, we had previously explored the use of touchscreens as image sensors. A touchscreen is essentially a very low-resolution depth camera that can only see about eight millimetres far, so we used this capability of our phones to scan fingers, knuckles, and hands to recognise users. And it was during that project that we noticed that the real challenge is to precisely detect the parts of the finger and hand that are actually in contact with the surface. So we aimed to address both challenges at once, the low resolution of these sensors and the accurate detection of contact areas, which is what led to our current project, CapContact.

Why is detecting actual contact areas important on our smartphones?

Christian Holz: If you look at phone displays, they now provide unprecedented visual quality. And with every new generation of phones, we can clearly see the difference: higher colour fidelity, higher resolution, crisper contrast. But what few people might know is that the touch sensors in our phones have not changed much since they first came out in the mid-2000s. Take the latest iPhone, for example: it has a display resolution of 2532x1170 pixels, but the touch sensor itself can only resolve input with a resolution of around 32x15 pixels – that’s almost 80 times lower along each axis than what we see on the screen. And here we are, wondering why we make so many typos on the small keyboard. We think that we should be able to select objects with pixel accuracy through touch, but that’s certainly not the case.
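The "almost 80 times" figure can be checked with a few lines of arithmetic. The short Python sketch below uses only the two resolutions quoted in the interview; the variable names are illustrative.

```python
# Figures quoted in the interview: display vs. touch-sensor resolution.
display = (2532, 1170)  # display pixels (long side, short side)
sensor = (32, 15)       # touch-sensor cells (long side, short side)

# Ratio along each axis, and the number of display pixels per sensor cell.
ratio_long = display[0] / sensor[0]
ratio_short = display[1] / sensor[1]
display_pixels_per_cell = ratio_long * ratio_short

print(f"{ratio_long:.1f}x along the long side")    # 79.1x
print(f"{ratio_short:.1f}x along the short side")  # 78.0x
print(f"about {display_pixels_per_cell:.0f} display pixels per sensor cell")
```

Along each axis the sensor is roughly 78 to 79 times coarser than the display, which means a single sensor cell covers several thousand display pixels.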

“Phone displays now provide unprecedented visual quality. But the touch sensors in our phones have not changed much since they first came out.” Professor Christian Holz

How did you improve the touch functionality?

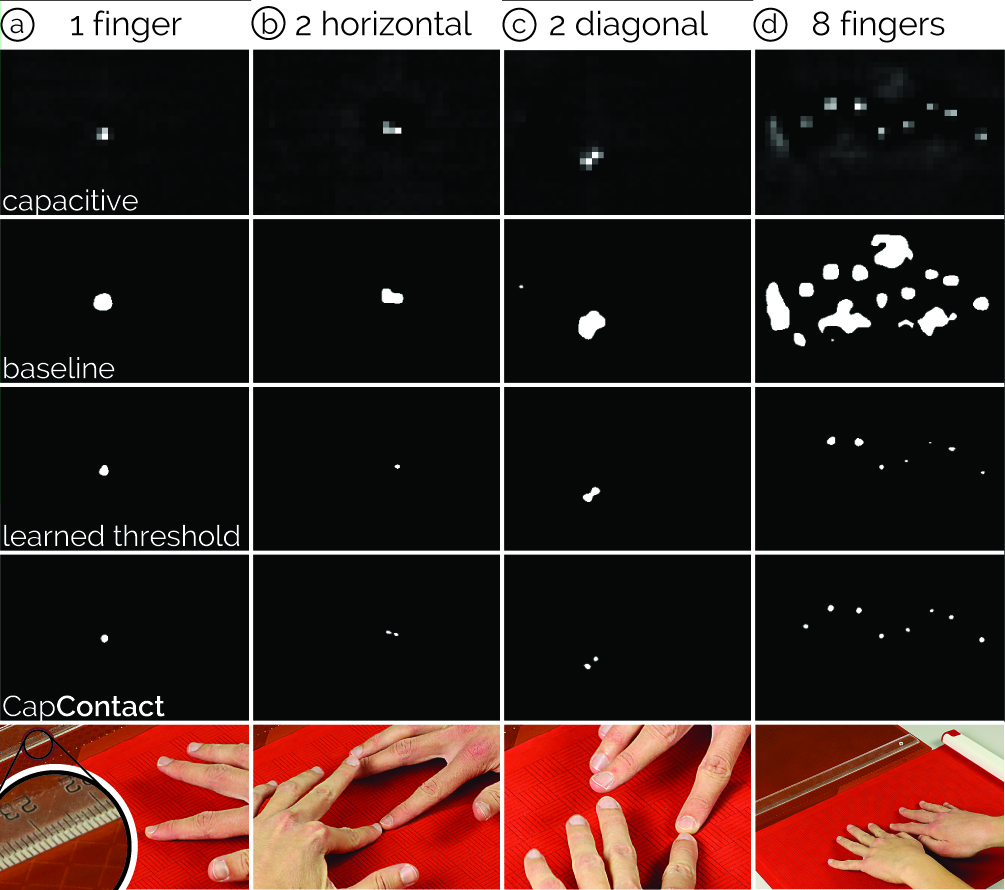

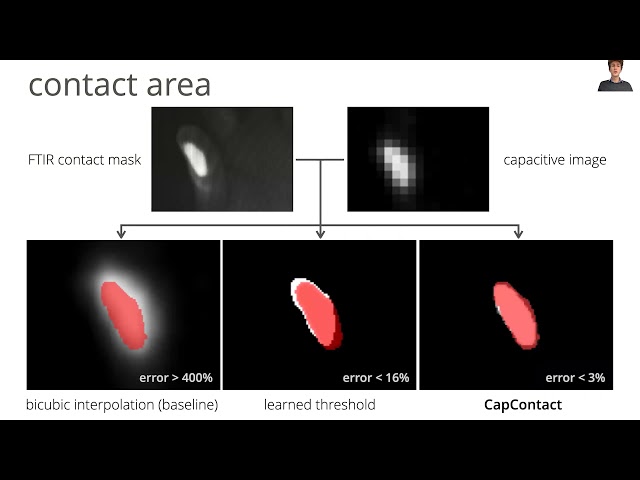

Christian Holz: The challenge is that the touch sensing modality – capacitive sensing – was never designed to infer actual contact; it only detects the proximity of our fingers. From these coarse proximity measurements, today’s touch devices interpolate an input location. With CapContact, our deep neural network-based method, we now bridge this gap with two main benefits. First, CapContact estimates actual contact areas between fingers and touchscreens upon touch. Second, CapContact generates these contact areas at eight times the resolution of current touch sensors, enabling our touch devices to detect touch much more precisely.
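To make the idea of interpolating an input location from coarse proximity measurements concrete, here is a minimal Python sketch. It assumes an intensity-weighted centroid, a common textbook approach; the interview does not say which interpolation commercial touch controllers actually use, and the function name and the sample frame are hypothetical.

```python
def weighted_centroid(grid, threshold=0.0):
    """Return the intensity-weighted (row, col) centre of a 2D grid, or None.

    Assumption: a plain weighted centroid stands in for the interpolation
    step; real touch-controller firmware may work differently.
    """
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            if value > threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0:
        return None  # nothing above the threshold: no touch detected
    return (row_sum / total, col_sum / total)


# Hypothetical 4x4 capacitive frame: a finger hovering over the centre,
# slightly closer to the right-hand cells.
frame = [
    [0, 0, 0, 0],
    [0, 2, 6, 0],
    [0, 2, 6, 0],
    [0, 0, 0, 0],
]
print(weighted_centroid(frame))  # (1.5, 1.75)
```

The result falls between sensor cells, which is how a coarse grid can still report sub-cell coordinates. It remains a function of proximity intensities, though, not of the area where the finger actually touches the glass.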

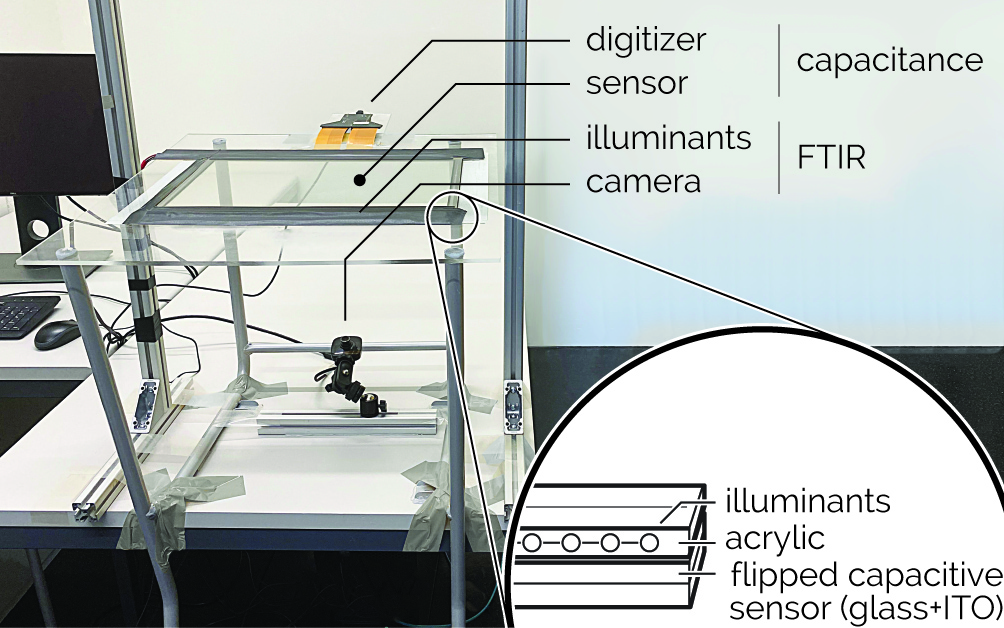

To train CapContact’s network, we developed a custom apparatus that integrates and simultaneously records capacitive intensities and true contact maps through a high-resolution pressure sensor. We captured many touches from a series of participants to record a dataset, from which CapContact learned to predict super-resolution contact masks using the coarse and low-resolution raw sensor values of today’s touch devices. And CapContact can now bring this super-resolution capacitive sensing to consumers’ smartphones and tablets.

How do users benefit from this super-resolution touch sensing?

Paul Streli: In our paper, we show that from the contact area between your finger and a smartphone’s screen as estimated by CapContact, we can derive touch-input locations with higher accuracy than current devices do. In fact, we show that one-third of errors on current devices are due to the low-resolution input sensing. And CapContact can remove that part of the error through our deep learning implementation, which manufacturers could deploy through a software update. We also show that CapContact can reliably distinguish adjacent touches where current devices fail, such as during a pinch gesture where the thumb and index finger are close together.
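As an illustration of why a high-resolution contact mask helps with adjacent touches, the Python sketch below labels connected regions in a binary mask and reports one centroid per region. This is not CapContact's pipeline: the binary mask, the 4-connectivity rule, and the function name are assumptions made for the example.

```python
from collections import deque


def touch_centroids(mask):
    """Return one (row, col) centroid per 4-connected region of 1s in mask.

    Assumption: the mask is binary (1 = contact, 0 = no contact).
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects every cell of this region.
            queue = deque([(r, c)])
            seen[r][c] = True
            cells = []
            while queue:
                y, x = queue.popleft()
                cells.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            centroids.append((
                sum(y for y, _ in cells) / len(cells),
                sum(x for _, x in cells) / len(cells),
            ))
    return centroids


# Hypothetical mask with two separate contact areas, e.g. thumb and index finger.
mask = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]
print(touch_centroids(mask))  # [(0.5, 0.5), (1.5, 4.5)]
```

Two regions separated by even a narrow gap yield two touch points. On a coarse proximity grid the same two fingers can blur into a single blob, which is the failure case described above.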

What’s the advantage for device manufacturers?

Paul Streli: Because we showed that CapContact super-resolves touch sensing, we conducted an experiment using an even lower-resolution sensor to evaluate its performance. Instead of the 32x15 sensor pixels on your iPhone, we used half the resolution along each axis: 16x8, which is almost ridiculously low. And yet, we demonstrated that CapContact detects touches and derives input locations with higher accuracy than current devices do at the regular resolution. This indicates that using our method, future devices could save sensing complexity and manufacturing costs in hardware, and use CapContact’s advanced signal processing in software to still deliver higher reliability and accuracy. That’s why we’re releasing the model and code as open source as well as the dataset we recorded for future research in the community.
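One way to picture the lower-resolution experiment is to emulate a 16x8 sensor by averaging 2x2 blocks of a 32x15 frame. The Python sketch below does exactly that. The interview does not describe how the 16x8 input was obtained, so the pooling approach, the edge handling, and the function name are assumptions.

```python
def downsample_2x(grid):
    """Average non-overlapping 2x2 blocks; edge blocks use the cells that exist.

    Assumption: average pooling emulates a half-resolution sensor. This is an
    illustration, not the procedure used in the CapContact experiment.
    """
    rows, cols = len(grid), len(grid[0])
    out = []
    for r in range(0, rows, 2):
        out_row = []
        for c in range(0, cols, 2):
            block = [
                grid[y][x]
                for y in range(r, min(r + 2, rows))
                for x in range(c, min(c + 2, cols))
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# Hypothetical 32x15 frame filled with placeholder values.
frame = [[float(r * 15 + c) for c in range(15)] for r in range(32)]
small = downsample_2x(frame)

print(len(small), "x", len(small[0]))  # 16 x 8
print(len(frame) * len(frame[0]), "->", len(small) * len(small[0]), "cells")  # 480 -> 128
```

Halving the resolution along each axis cuts the number of sensor cells from 480 to 128, roughly a quarter, which gives a sense of the hardware savings mentioned above.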

What would it take for CapContact to hit the market?

Christian Holz: We see promise in the recent developments of mobile devices that integrate neural processing units for on-device machine learning, for example in the latest iPhones and Android phones and tablets. CapContact sits between the sensing layer and the application layer, and these neural processors are optimally suited to run CapContact on the raw touch signals. This creates an opportunity for device manufacturers to adopt our method for better capacitive touch sensing and to deliver a better user experience.

What are the next steps for the project?

Christian Holz: We will keep exploring CapContact’s potential in very low-resolution touch sensing applications given the numerous hardware benefits in terms of lower complexity and the gains in user experience. For example, we deployed CapContact on Microsoft’s Project Zanzibar device, a 21” portable and flexible mat that supports interaction with touch and tangible objects and that happens to have a much lower-resolution sensor than current smartphones. But using CapContact, we’re bringing fully-fledged, fine-granular touch interaction to such larger form factor devices, and we’re now exploring the resulting opportunities.

By the way, we’re always interested in working with students who are excited about the integration of embedded devices, machine learning, and interactive user experiences. In the Sensing, Interaction & Perception Lab, we explore the interface between humans and technology and work at the intersection of hardware and software systems. For more information, check out our website.

Paul Streli and Christian Holz have published the results of their research in a paper titled “CapContact: Super-resolution Contact Areas from Capacitive Touchscreens”, which appeared at ACM CHI 2021 this week and received a Best Paper Award.